An analysis shows that information flow between individuals in a social network can be ‘gerrymandered’ to skew perceptions of how others in the community will vote — which can alter the outcomes of elections.

Democracy requires an informed electorate. However, some technological advances change how information flows through society, with serious consequences for the democratic process. Writing in Nature, Stewart et al.1 use experiments and computational models to uncover a previously unrecognized obstacle to democratic decision-making. When social networks become primary conduits of information, the pattern of network connections influences what voters believe about others’ voting intentions. This influence matters, because people shift their own perspectives and voting strategies in response, either through behavioural spread known as social contagion2 or on the basis of strategic considerations.

The Internet has erased geographical barriers and allowed people across the globe to interact in real time around their common interests. But social media is starting to compete with, or even replace, nationally visible conversations in print and on broadcast media with ad libitum, personalized discourse on virtual social networks3. Instead of broadening their spheres of association, people gravitate towards interactions with ideologically aligned content and similarly minded individuals. Portions of a social network can thus turn into ‘filter bubbles’4, in which individuals see only an algorithmically curated subset of the larger conversation. Filter bubbles reinforce political views, or even make them more extreme, and drive political polarization. Stewart and colleagues now describe a related, but distinct, way in which social-network structure can affect voting behaviour.

The authors examined situations in which two groups of individuals struggle over a contentious decision, under the spectre of gridlock. They developed a model of voter choice based on game theory — a theoretical framework for analysing strategic behaviour. They tested this model with 2,520 real people playing an online game in groups of 12. The model and the experiment shared the same rules: each individual had a preferred outcome, but all individuals preferred consensus, even on the less favoured outcome, to inaction.

Such scenarios are common. For example, in the case of the US government budget process, failure to pass a budget results in a harmful government shutdown. To avoid gridlock, it might make sense to vote against one’s preferred option, particularly as the threat of gridlock increases and the chance of winning declines. Therefore, avoiding gridlock requires information about how others will vote.
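The rules of the game and the reasoning behind a compromise vote can be made concrete with a minimal sketch. The payoff values, the threshold of 8 votes out of 12 and the names `outcome`, `payoff` and `best_vote` are illustrative assumptions and are not taken from Stewart and colleagues' model; only the ordering of the payoffs (own option wins, then the other option wins, then gridlock) comes from the description above.

```python
from collections import Counter

# Assumed payoff values; only their ordering (own option wins > other option
# wins > gridlock) reflects the rules described in the text.
WIN, COMPROMISE, GRIDLOCK = 2.0, 1.0, 0.0

def outcome(votes, needed):
    """Return the option with at least `needed` votes, or None for gridlock.

    `needed` is assumed to exceed half of the votes, so at most one option can win.
    """
    option, top = Counter(votes).most_common(1)[0]
    return option if top >= needed else None

def payoff(preferred, votes, needed):
    """Payoff to a voter who prefers `preferred`, given everyone's votes."""
    result = outcome(votes, needed)
    if result is None:
        return GRIDLOCK
    return WIN if result == preferred else COMPROMISE

def best_vote(preferred, expected_other_votes, needed, options=("orange", "blue")):
    """Pick the vote with the highest payoff, given what the voter expects of others.

    Ties are broken in favour of the voter's preferred option.
    """
    def value(option):
        return payoff(preferred, list(expected_other_votes) + [option], needed)
    return max(options, key=lambda o: (value(o), o == preferred))

# A hypothetical orange supporter in a group of 12, with 8 votes needed to win,
# who expects 4 orange and 7 blue votes from the others.
others = ["orange"] * 4 + ["blue"] * 7
print(best_vote("orange", others, needed=8))
```

In this toy case the orange supporter does best by voting blue: orange cannot reach the threshold, whereas one more blue vote averts gridlock. The choice depends entirely on what the voter expects the others to do, which is why the accuracy of that expectation matters.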

Stewart et al. envisage such information as being obtained through connections in a social network. In a ‘fair’ network, most people receive an accurate picture through their contacts about how others will vote. However, Stewart et al. discovered that, even without changing the number of connections that each individual has, networks can be rewired in ways that lead some individuals to reach misleading conclusions about community preferences. Ultimately, these misperceptions can even sway the course of an election. In this process, which the authors dub information gerrymandering, a network is arranged such that the members of one group waste their influence on like-minded individuals.
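How wiring alone can skew perceptions is easiest to see in a toy example. The sketch below is a hand-built, hypothetical network, not one taken from the study: six individuals favour orange, four favour blue, and everyone has exactly three reciprocal contacts. Each voter is assumed to judge popularity solely from the preferences of their contacts; the function name `perceived_leader` and the node labels are made up for illustration.

```python
from collections import Counter, defaultdict

def perceived_leader(voter, contacts, preference):
    """Option that leads among a voter's contacts, or 'draw' if they split evenly."""
    counts = Counter(preference[c] for c in contacts[voter])
    if counts["orange"] == counts["blue"]:
        return "draw"
    return "orange" if counts["orange"] > counts["blue"] else "blue"

# Hypothetical network: O1..O6 favour orange, B1..B4 favour blue.
edges = [
    ("B1", "B2"), ("B2", "B3"), ("B3", "B4"), ("B4", "B1"),  # blue supporters form a ring
    ("O1", "B1"), ("O1", "B2"), ("O2", "B3"), ("O2", "B4"),  # blue influence targets O1 and O2
    ("O1", "O2"),
    ("O3", "O4"), ("O3", "O5"), ("O3", "O6"),                # orange-only cluster
    ("O4", "O5"), ("O4", "O6"), ("O5", "O6"),
]

# Build reciprocal contact lists from the edge list.
contacts = defaultdict(set)
for a, b in edges:
    contacts[a].add(b)
    contacts[b].add(a)

preference = {v: "orange" if v.startswith("O") else "blue" for v in contacts}

actual = Counter(preference.values())
perceived = Counter(perceived_leader(v, contacts, preference) for v in contacts)
print("actual support:", dict(actual))
print("perceived leader:", dict(perceived))
```

Orange holds a 6 to 4 majority, yet six of the ten voters see blue ahead among their contacts. Four orange supporters talk only to one another, so their influence reaches no one who might be swayed, whereas every blue supporter spends one connection on an orange supporter who ends up with mostly blue contacts.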

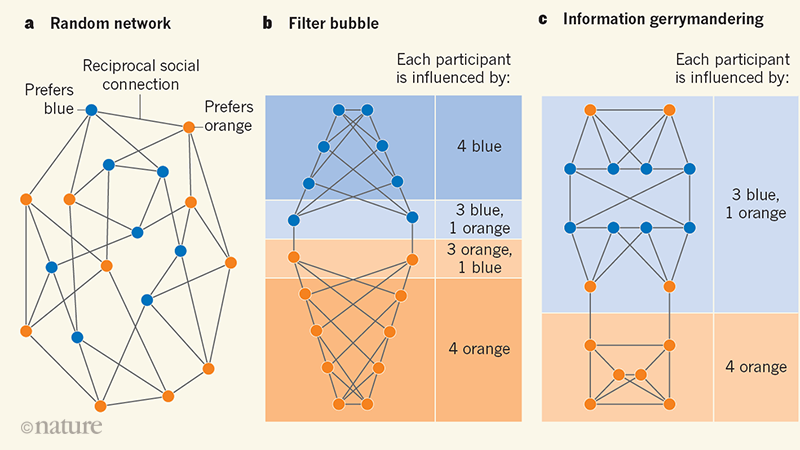

In geographical gerrymandering, the borders of voting districts are drawn so as to concentrate voters from the opposition party into one or a few districts, leaving the voters for the gerrymandering party in a numerical majority elsewhere5. In information gerrymandering, the way in which voters are concentrated into districts is not what matters; rather, it is the way in which the connections between them are arranged (Fig. 1). Nevertheless, like geographical gerrymandering, information gerrymandering threatens ideas about proportional representation in a democracy.
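The arithmetic of geographical gerrymandering can be checked with a small, made-up example. The district sizes and vote counts below are hypothetical: orange has 30 of 50 voters, but most of them are packed into two districts.

```python
# Hypothetical map of five districts, each listed as (orange voters, blue voters).
# Orange supporters are packed into the first two districts.
districts = [(10, 0), (8, 2), (4, 6), (4, 6), (4, 6)]

orange_total = sum(orange for orange, blue in districts)
blue_total = sum(blue for orange, blue in districts)
blue_seats = sum(1 for orange, blue in districts if blue > orange)

print(f"orange voters: {orange_total}, blue voters: {blue_total}")
print(f"districts won by blue: {blue_seats} of {len(districts)}")
```

With 40% of the voters, blue carries three of the five districts, because orange's surplus votes in the packed districts count for nothing elsewhere.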

Figure 1 | Social-network structure affects voters’ perceptions. In these social networks, ten individuals favour orange and eight favour blue. Each individual has four reciprocal social connections. a, In this random network, eight individuals correctly infer from their contacts’ preferences that orange is more popular, eight infer a draw and only two incorrectly infer that blue is more popular. b, When individuals largely interact with like-minded individuals, filter bubbles arise in which all individuals believe that their party is the most popular. Voting gridlock is more likely in such situations, because no one recognizes a need to compromise. c, Stewart et al.1 describe ‘information gerrymandering’, in which the network structure skews voters’ perceptions about others’ preferences. Here, two-thirds of voters mistakenly infer that blue is more popular. This is because blue proponents strategically influence a small number of orange-preferring individuals, whereas orange proponents squander their influence on like-minded individuals who have exclusively orange-preferring contacts, or on blue-preferring individuals who have enough blue-preferring contacts to remain unswayed.

In Stewart and colleagues’ model, gridlock is assumed to be both likely and undesirable. It is unclear whether these assumptions apply in larger-scale decision processes such as national elections, in which gridlock is either extremely unlikely or impossible. However, these assumptions will often apply in other types of collective decision process, including those that play out in boardrooms, among juries and in the halls of the US Congress. Indeed, the authors find evidence of information gerrymandering in the voting patterns of US and European Union legislative bodies, as well as in data from US federal elections.

The assumption that gridlock is likely but undesirable does not apply to certain highly polarized debates in which opinions are strongly divided (for example, issues such as abortion, immigration, ethnic nationalism and the rights of people from sexual and gender minorities). In such cases, it might be that both sides would sooner see Solomon split the baby — that is, suffer a devastating deadlock — than concede. Nevertheless, more-general forms of information gerrymandering might be possible, even in these cases. For example, people are more likely to vote in elections that they believe to be close contests6. Network structures that skew perceptions of others’ voting intentions in a way that influences voter turnout by a particular group could be construed as information gerrymandering. The same could be said of network structures that drive asymmetric patterns of social contagion.

The implications of Stewart and colleagues’ work are alarming. In the past, information was disseminated by a small number of official sources such as newspapers and television stations, or through real-world social networks that emerged largely from distributed processes involving individual interpersonal dynamics. This is no longer the case, because social-network websites deploy technologies that restructure social connections by design. These online social networks are highly dynamic systems that change as a result of numerous feedbacks between people and machines. Algorithms suggest connections; people respond; and the algorithms adapt to the responses. Together, these interactions and processes alter what information people see and how they view the world. In addition, micro-targeted political advertising offers a surreptitious and potent tool for information gerrymandering. Alternatively, information gerrymandering might arise without conscious intent, but simply as an unintended consequence of machine-learning algorithms that are trained to optimize user experience.

At present, online social networks are not subject to substantive regulations or transparency requirements. Previous communication technologies that have had the potential to interfere with the democratic process — such as radio and television — have been subjected to legislative oversight7. We suspect that the social-media ecosystem is overdue for similar treatment.

Nature 573, 40–41 (2019)

References

1. Stewart, A. J. et al. Nature 573, 117–121 (2019).
2. Hodas, N. O. & Lerman, K. Sci. Rep. 4, 4343 (2014).
3. Shearer, E. Social Media Outpaces Print Newspapers in the US as a News Source (Pew Research Center, 2018); go.nature.com/2kgh7
4. Pariser, E. The Filter Bubble (Penguin, 2011).
5. Ingraham, C. The Washington Post (1 March 2015).
6. Pattie, C. J. & Johnston, R. J. Environ. Plan. A 37, 1191–1206 (2005).
7. Napoli, P. M. Telecommun. Policy 39, 751–760 (2015).